Hello! My name is Alessandro. I'm a Master's student in Interactive Media Technology at KTH Royal Institute of Technology and a research engineer in neurosurgery-applied Mixed Reality at Karolinska University Hospital.

Knocking sounds are highly expressive. In our previous research, we have shown that a person can differentiate between basic emotional states from the sound of knocking actions alone. In media productions, such as film and games, knocks can be very important storytelling devices, as they allow the story to transition from one part to another. Research has shown that colours can affect our perception of emotions. However, the relationship between colours and emotions is complex and dependent on multiple factors. In this study, we investigate how the visual characteristics of a door, more specifically its colour, texture and material, presented together with emotionally expressive knocking actions, can affect the perception of the overall emotion evoked in the audience. Results show that the door's visual characteristics have little effect on the overall perception of emotions, which remains dominated by the emotions expressed by the knocking sounds.

- Alessandro Iop

- Sandra Pauletto

- Alessandro Iop

- Jonathan Geffen

- Gabriel Carrasco Ringmar

- Karin Lagrelius

- Alessandro Iop

- Federico Jimenez Villalonga

- Gabriel Carrasco Ringmar

- Siyuan Su

- Annusyirvan Fatoni

Knocking sounds are highly meaningful everyday sounds. There exist many ways of knocking, expressing important information about the state of the person knocking and their relationship with the other side of the door. In media production, knocking sounds are important storytelling devices: they allow transitions to new scenes and create expectations in the audience. Despite this important role, knocking sounds have rarely been the focus of research. In this study, we create a data set of knocking actions performed with different emotional intentions. We then verify, through a listening test, whether these emotional intentions are perceived through listening to sound alone. Finally, we perform an acoustic analysis of the experimental data set to identify whether emotion-specific acoustic patterns emerge. The results show that emotional intentions are correctly perceived for some emotions. Additionally, the emerging emotion-specific acoustic patterns confirm, at least in part, findings from previous research in speech and music performance.
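As a rough illustration of what an acoustic analysis of a knocking recording can start from, the sketch below computes two simple descriptors: the knocking rate (from the mean inter-onset interval between knocks) and the overall level in dBFS. It assumes knock onsets have already been detected and that samples are normalised to [-1, 1]; the `knockFeatures` function and its feature set are hypothetical and are not the analysis pipeline used in the study.

```typescript
// Hypothetical feature set for one knocking recording (illustrative only).
interface KnockFeatures {
  knockCount: number;
  meanIoiSec: number;   // mean inter-onset interval between knocks, in seconds
  knocksPerSec: number; // knocking rate, the reciprocal of the mean IOI
  rmsDbfs: number;      // overall RMS level in dB relative to full scale
}

// Assumes onsets were detected beforehand and samples are normalised to [-1, 1].
function knockFeatures(onsetsSec: number[], samples: Float32Array): KnockFeatures {
  if (onsetsSec.length < 2) throw new Error("need at least two knock onsets");
  if (samples.length === 0) throw new Error("empty signal");

  // Sort a copy so the caller's array is left untouched.
  const onsets = onsetsSec.slice().sort((a, b) => a - b);
  let ioiSum = 0;
  for (let i = 1; i < onsets.length; i++) ioiSum += onsets[i] - onsets[i - 1];
  const meanIoiSec = ioiSum / (onsets.length - 1);
  if (meanIoiSec <= 0) throw new Error("onsets must not all coincide");

  let sumSquares = 0;
  for (let i = 0; i < samples.length; i++) sumSquares += samples[i] * samples[i];
  const rms = Math.sqrt(sumSquares / samples.length);
  // Floor the RMS to avoid log10(0) = -Infinity on digital silence.
  const rmsDbfs = 20 * Math.log10(Math.max(rms, 1e-12));

  return { knockCount: onsets.length, meanIoiSec, knocksPerSec: 1 / meanIoiSec, rmsDbfs };
}

// Example: four evenly spaced knocks over a constant-amplitude signal.
const features = knockFeatures([0, 0.25, 0.5, 0.75], new Float32Array(1000).fill(0.5));
console.log(features);
```

Descriptors like rate and level are the kind of cues that speech and music-performance research associates with emotional expression; a real analysis would typically add more, such as per-knock timbre or timing variability.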

- Alessandro Iop

- Malcolm Houel

- Alfred Berg

- Abhilash Arun

- Sandra Pauletto

- Adrián Barahona-Ríos

In this project, we designed, developed and evaluated an interactive VR visualization of the recorded activity of a spiking neural network, provided by the Computational Neuroscience division of KTH's School of Electrical Engineering and Computer Science (EECS). The offline, desktop-based application, deployed on the HTC VIVE platform, is an improved version of an existing project presented by Vincent Wong at the 201() C-Awards. It displays an abstract, animated and sonified representation of different structures in the neural network, namely pyramidal cells, basket cells, minicolumns and hypercolumns, along with their activations over time. Redundant controls for navigation, switching between views and playback are provided via both the VIVE controllers and the keyboard. Development was done in Unity using SteamVR and Steam Audio, and the subsequent user evaluation involved subject matter experts (SMEs) as well as students from the Information Visualization class of 2020.
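The application itself was built in Unity, so the following TypeScript is only a language-neutral sketch of one piece of such playback logic: finding which spikes fall inside a short window before the current playback time, then aggregating them per minicolumn. The recording format (`Spike`), the group sizes `CELLS_PER_MINICOLUMN` and `MINICOLUMNS_PER_HYPERCOLUMN`, and all function names are hypothetical assumptions, not the actual layout of the network.

```typescript
// Hypothetical recording format: one event per spike, sorted by time.
interface Spike {
  timeMs: number;
  cellId: number;
}

// Hypothetical layout: cells are numbered consecutively, grouped into
// minicolumns, which are in turn grouped into hypercolumns.
const CELLS_PER_MINICOLUMN = 30;
const MINICOLUMNS_PER_HYPERCOLUMN = 10;

const minicolumnOf = (cellId: number): number => Math.floor(cellId / CELLS_PER_MINICOLUMN);
const hypercolumnOf = (cellId: number): number =>
  Math.floor(minicolumnOf(cellId) / MINICOLUMNS_PER_HYPERCOLUMN);

// Binary search: index of the first spike with timeMs >= t.
function lowerBound(spikes: Spike[], t: number): number {
  let lo = 0;
  let hi = spikes.length;
  while (lo < hi) {
    const mid = (lo + hi) >>> 1;
    if (spikes[mid].timeMs < t) lo = mid + 1;
    else hi = mid;
  }
  return lo;
}

// Spikes in the window [t - windowMs, t): what should light up at playback time t.
function activeSpikes(spikes: Spike[], t: number, windowMs: number): Spike[] {
  return spikes.slice(lowerBound(spikes, t - windowMs), lowerBound(spikes, t));
}

// Number of active spikes per minicolumn, e.g. to drive brightness or sound.
function minicolumnActivity(active: Spike[]): Map<number, number> {
  const counts = new Map<number, number>();
  for (const s of active) {
    const mc = minicolumnOf(s.cellId);
    counts.set(mc, (counts.get(mc) || 0) + 1);
  }
  return counts;
}
```

Keeping the spikes sorted by time lets each rendered frame query its window in logarithmic time instead of scanning the whole recording, which matters when scrubbing back and forth during playback.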

- Alessandro Iop

- Harsh Kakroo

- Alfred Berg

- Fabio Cassisa

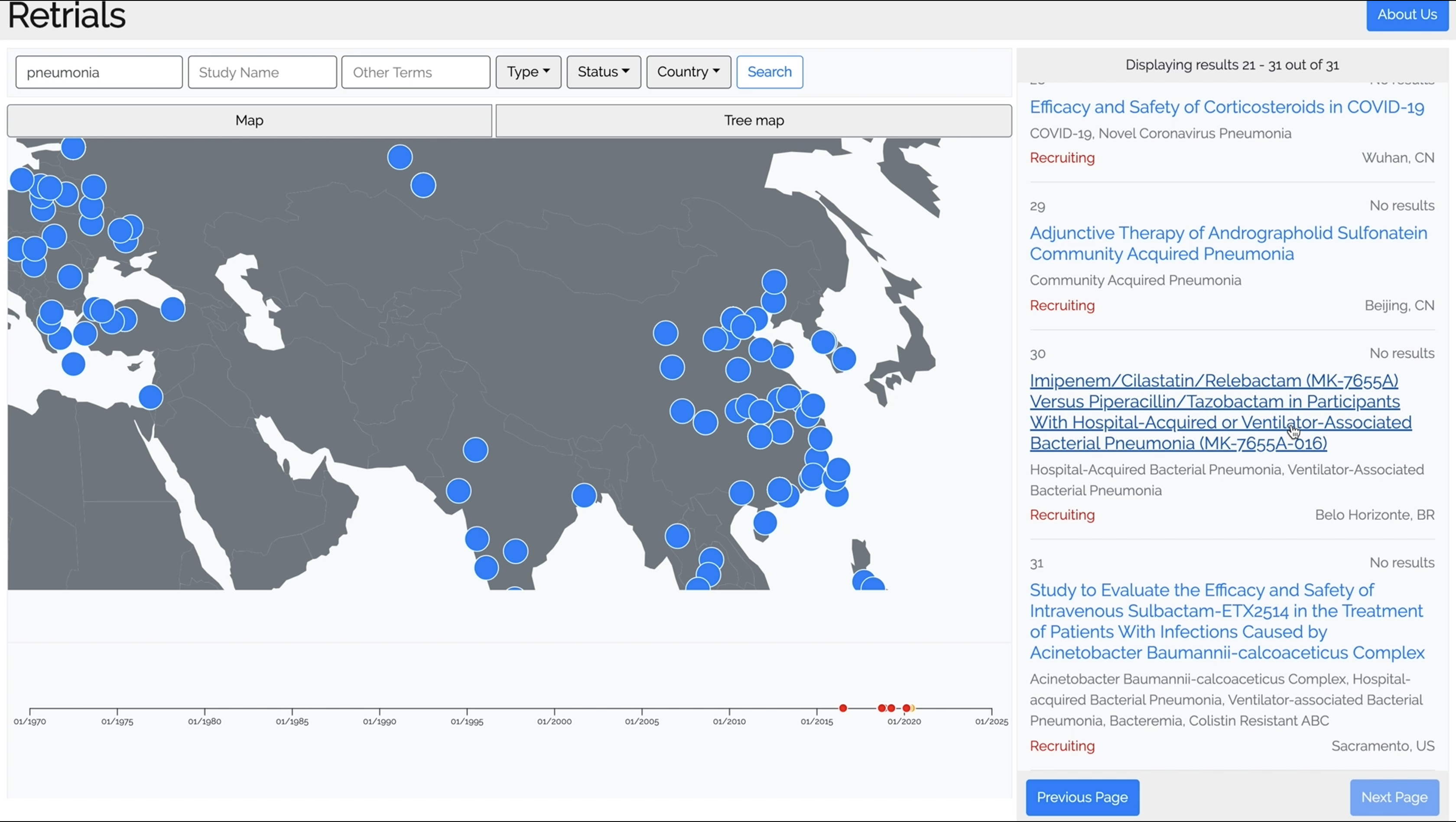

In this project, we created an interactive visualization, accessible through a web browser, for searching all the clinical trials currently registered on the ClinicalTrials.gov website. Its intended users (doctors as well as researchers and patients) can freely explore the dataset by navigating to, and interacting with, either of the two views provided. The first is a geographical map on which all results of a database query, submitted via a form, are overlaid as colored dots at the locations where the clinical trials are being carried out; it also includes a zoomable timeline with the start dates of all returned results. The second view is a hierarchical treemap for exploring ClinicalTrials.gov's classification of all diseases and conditions, which lets users assess a first level of similarity between nodes. As in the map view, results of querying the database through the form are presented in more detail as a scrollable, paginated list on the right side of the web page.
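In a treemap, each node's area has to reflect everything beneath it in the classification. A minimal sketch of that aggregation step, assuming the classification arrives as category paths with a trial count at each leaf (a hypothetical input shape, not ClinicalTrials.gov's actual data format; `insertPath` and `sumValues` are illustrative names), could look like this:

```typescript
// Hypothetical tree node: `value` is the number of trials, `children` keyed by name.
interface TreeNode {
  name: string;
  value: number;
  children: Map<string, TreeNode>;
}

function newNode(name: string): TreeNode {
  return { name, value: 0, children: new Map<string, TreeNode>() };
}

// Add `count` trials at the node reached by `path`, creating missing nodes on the way.
function insertPath(root: TreeNode, path: string[], count: number): void {
  let node = root;
  for (const part of path) {
    let child = node.children.get(part);
    if (child === undefined) {
      child = newNode(part);
      node.children.set(part, child);
    }
    node = child;
  }
  node.value += count;
}

// Post-order pass: each node's value becomes its own count plus all descendants',
// so a parent's rectangle contains its children's areas. Call once per tree;
// calling it again would double-count.
function sumValues(node: TreeNode): number {
  let total = node.value;
  node.children.forEach((child) => {
    total += sumValues(child);
  });
  node.value = total;
  return total;
}

const root = newNode("All conditions");
insertPath(root, ["Neoplasms", "Breast Neoplasms"], 3);
insertPath(root, ["Neoplasms", "Lung Neoplasms"], 2);
insertPath(root, ["Infections"], 4);
sumValues(root); // root.value is now 9; "Neoplasms" holds 5
```

Libraries such as d3-hierarchy offer the same aggregation through `hierarchy(...).sum(...)`, after which `treemap()` computes the rectangle layout.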

- Alessandro Iop

- Omar Alabbasi

- Alfred Berg

- John Castronuovo

- Federico Jimenez Villalonga

In this project, we developed a simple Android application in Android Studio, with the aim of evaluating how different native widgets performed when tactile feedback was applied to the interaction, and ultimately of deriving guidelines for developers. Specifically, the Button, SeekBar, CheckBox, Switch, Spinner and RatingBar widgets were evaluated under three distinct aspects: naturalness, effectiveness and the attention they drew during the interaction. An online survey was built for the evaluation, and the responses (in two languages, English and Italian) were collected and analysed statistically. Eight tips for developing Android UIs were presented.

- Alessandro Iop

- Federico Landorno

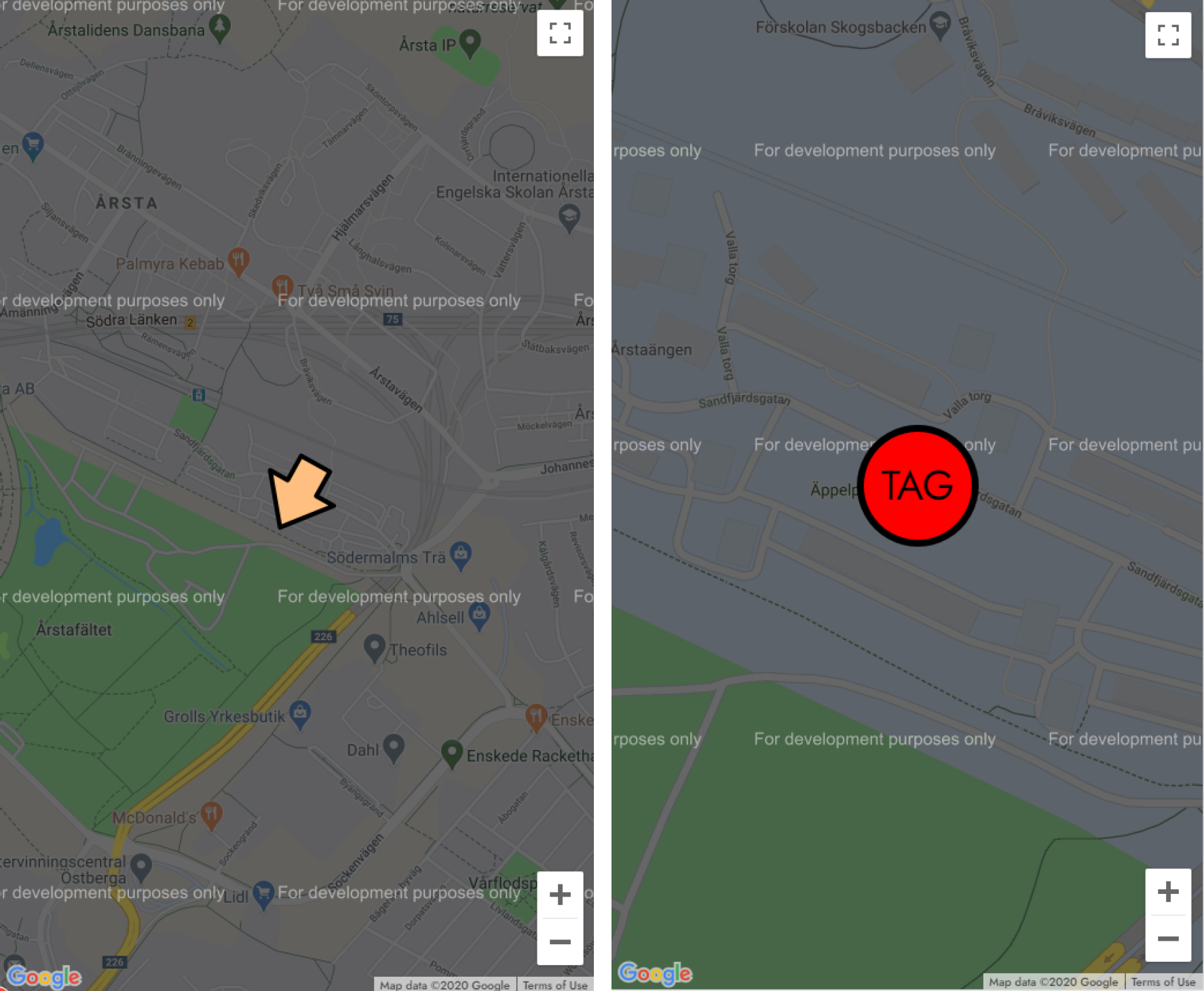

In this project, a mobile version of the popular game of tag was developed as a hybrid web app, comprising a login system (through Google OAuth), a lobby that users can enter and exit, and a game environment with simple mechanics. The game environment displays a fullscreen map centered on the user's position, along with an arrow icon that points to the target player and changes color according to the distance between the user and the target. When the target player is close enough, the arrow is replaced by a circular "tag" button which, when pressed, eliminates the target player from the game and redirects them to the lobby. The last player standing wins. The project was developed with React.js and Onsen UI on the front end and Firebase (Firestore + Firebase Authentication) on the back end; the Google Maps API was used for the game map and positioning, and PubNub for real-time position updates.
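The arrow and the tag button boil down to two geographic computations on the players' positions: the distance between them and the bearing from the user to the target. A minimal sketch, assuming positions as latitude/longitude in degrees; the haversine and initial-bearing formulas are standard, while the tag radius, the colour bands and the `hudState` helper are hypothetical choices rather than the values used in the game:

```typescript
interface LatLng {
  lat: number; // degrees
  lng: number; // degrees
}

const EARTH_RADIUS_M = 6371000; // mean Earth radius
const TAG_RADIUS_M = 15;        // hypothetical "close enough to tag" threshold

const toRad = (deg: number): number => (deg * Math.PI) / 180;

// Great-circle distance in metres using the haversine formula.
function distanceM(a: LatLng, b: LatLng): number {
  const dLat = toRad(b.lat - a.lat);
  const dLng = toRad(b.lng - a.lng);
  const h =
    Math.sin(dLat / 2) ** 2 +
    Math.cos(toRad(a.lat)) * Math.cos(toRad(b.lat)) * Math.sin(dLng / 2) ** 2;
  return 2 * EARTH_RADIUS_M * Math.asin(Math.min(1, Math.sqrt(h)));
}

// Initial bearing from a to b, in degrees clockwise from north (for rotating the arrow).
function bearingDeg(a: LatLng, b: LatLng): number {
  const lat1 = toRad(a.lat);
  const lat2 = toRad(b.lat);
  const dLng = toRad(b.lng - a.lng);
  const y = Math.sin(dLng) * Math.cos(lat2);
  const x = Math.cos(lat1) * Math.sin(lat2) - Math.sin(lat1) * Math.cos(lat2) * Math.cos(dLng);
  return ((Math.atan2(y, x) * 180) / Math.PI + 360) % 360;
}

type HudState =
  | { kind: "tag" }
  | { kind: "arrow"; rotationDeg: number; color: string };

// Hypothetical colour bands: the arrow "warms up" as the target gets closer.
function arrowColor(d: number): string {
  if (d < 50) return "red";
  if (d < 200) return "orange";
  return "blue";
}

// Decide what to draw over the map: the tag button when in range, the arrow otherwise.
function hudState(user: LatLng, target: LatLng): HudState {
  const d = distanceM(user, target);
  if (d <= TAG_RADIUS_M) return { kind: "tag" };
  return { kind: "arrow", rotationDeg: bearingDeg(user, target), color: arrowColor(d) };
}
```

If the map rotates with the device heading, that heading would also need to be subtracted from the bearing before rotating the arrow icon.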

- Alessandro Iop

- Hannes Runelöv

- Ramtin Erfani

- Oscar Rosquist

In this project, a system of three hexagonal decorative lights was produced using the rapid prototyping techniques presented in the course, namely laser cutting, milling, water jetting and 3D printing. Each light consists of a wooden base with an LED strip attached to it, an opaque acrylic panel as a cover, and a metal cylinder that supports it. A 3D-printed PLA box houses the Arduino UNO board that controls the LED strips of all the lights.

- Alessandro Iop

- Harsh Kakroo

- Alfred Berg

- Bastian Orthmann

- Sabina Nordell

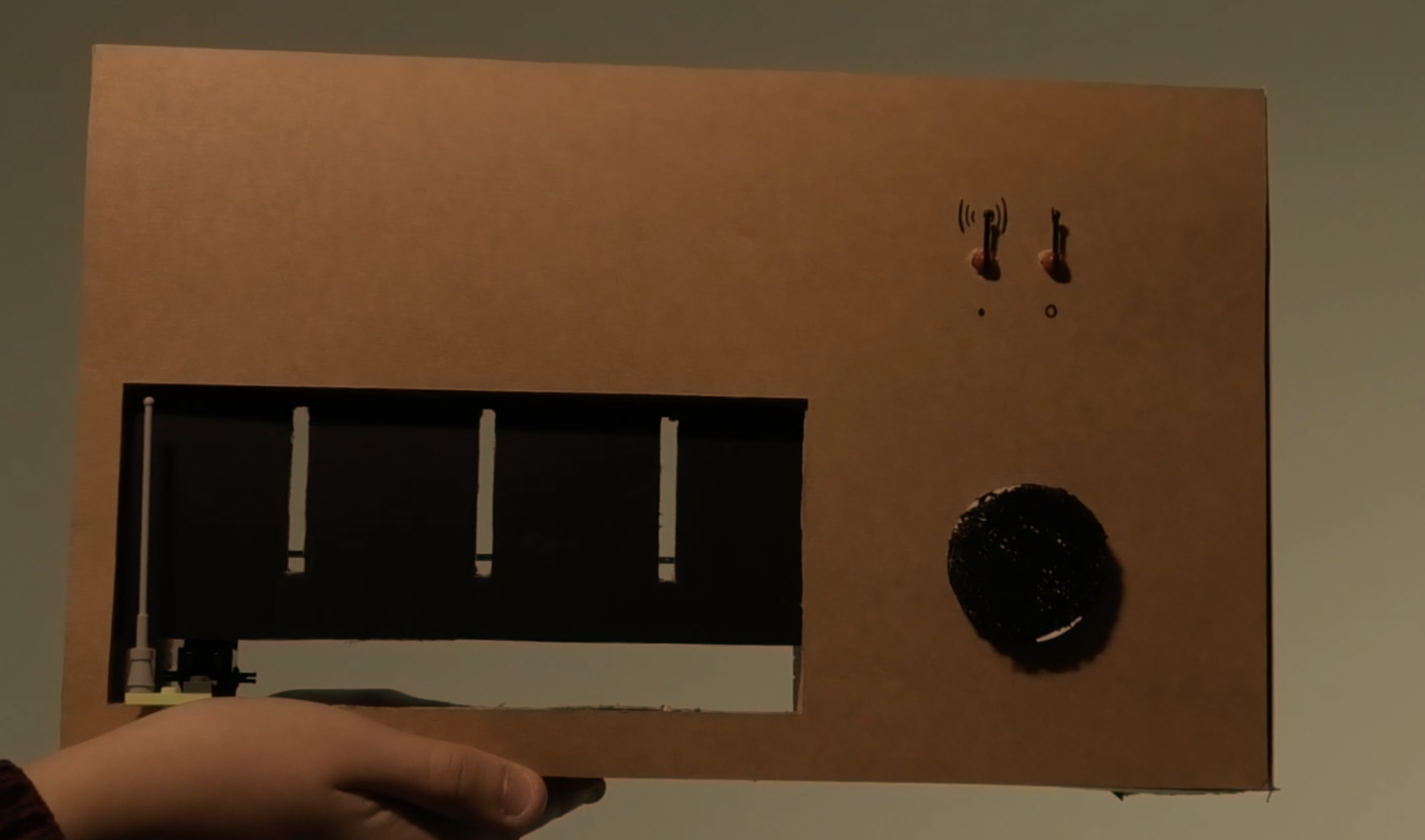

In this project, after extensive requirement elicitation through interviews with potential target users, we designed Luma, a device that allows people to keep in touch with their friends and families while carrying out their daily activities at home, especially cooking. It is aimed at young users who enjoy cooking with their close ones but rarely have the opportunity to do so, and is meant as a replacement for more traditional means of communication. Following the principles of so-called "slow technology", which implies the absence of displays and of advanced interaction beyond the scope of the product, Luma lets users switch their status from "unavailable" to "available for chatting", thereby becoming reachable by other contacts, and tune into a specific channel to find out whether any friend, colleague or family member is available as well. The product, which resembles a retro-looking radio, was both 3D modelled and lo-fi prototyped using commonly available materials in order to test whether the requirements were met. Qualitative user studies were performed at every stage of the design process, while user personas and usage scenarios were developed in parallel to validate the idea.

- Alessandro Iop

- Abhilash Arun

- Alfred Berg

- Elise Reichardt

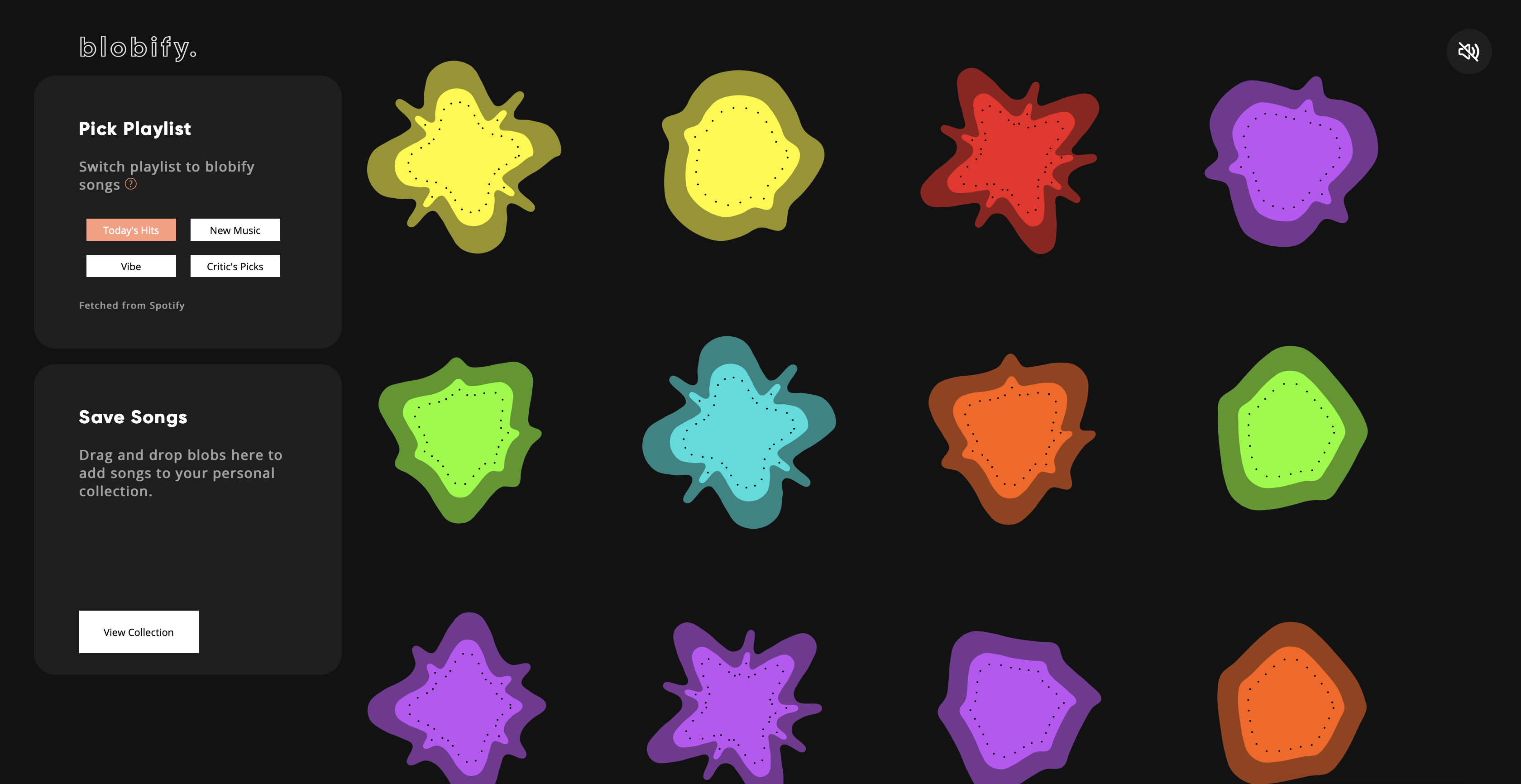

In this project, we developed a Spotify-powered web application for exploring music playlists through a visual representation of the songs' acoustic features. In particular, songs are presented as animated 2D blobs whose attributes depend on their energy, tempo and key. Dragging a blob and dropping it in a specific area of the screen adds the song to a custom collection, where all of the user's favorite songs are stored. When the collection is opened, its songs are presented as a table listing their attributes. The application, developed in React-Redux, follows an MVC pattern, with functional components split into container and presentational components. The back end was built on Firestore, while the front end used the Spotify API together with two.js for rendering the blobs, pressure.js for enabling force touch, and hotjar and videoask for user evaluation through heatmaps and questionnaires.
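A sketch of how Spotify's audio features could drive a blob's appearance. The fields `energy` (0.0 to 1.0), `tempo` (beats per minute) and `key` (pitch class 0 to 11, or -1 when no key is detected) follow Spotify's audio-features object; the mapping itself (`blobStyle`, one hue step per semitone, energy as wobble, tempo as pulse rate) is a hypothetical illustration, not the app's actual code.

```typescript
// The three fields of Spotify's audio-features object that drive the blobs.
interface AudioFeatures {
  energy: number; // 0.0 to 1.0
  tempo: number;  // beats per minute
  key: number;    // pitch class 0 to 11 (0 = C), or -1 if no key was detected
}

// Hypothetical visual parameters of one blob.
interface BlobStyle {
  color: string;   // CSS colour string
  wobble: number;  // 0 = perfect circle, 1 = most irregular outline
  pulseHz: number; // how many times per second the blob pulses
}

function blobStyle(f: AudioFeatures): BlobStyle {
  const hasKey = Number.isInteger(f.key) && f.key >= 0 && f.key <= 11;
  return {
    // One 30-degree hue step per semitone; grey when Spotify reports no key.
    color: hasKey ? `hsl(${f.key * 30}, 70%, 55%)` : "hsl(0, 0%, 55%)",
    // Clamp energy into [0, 1] and use it directly as the outline irregularity.
    wobble: Math.min(1, Math.max(0, f.energy)),
    // Pulse once per beat: BPM / 60 = beats per second.
    pulseHz: f.tempo > 0 ? f.tempo / 60 : 0,
  };
}

// Example: an energetic song in D at 120 BPM.
const style = blobStyle({ energy: 0.8, tempo: 120, key: 2 });
// style is { color: "hsl(60, 70%, 55%)", wobble: 0.8, pulseHz: 2 }
```

Mapping keys chromatically puts C and C sharp next to each other on the colour wheel; ordering them by the circle of fifths instead (`(key * 7) % 12`) would place harmonically related keys at neighbouring hues.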

- Alessandro Iop

- Sabina Nordell

- Karolin Valaszkai

- Daniel Parhizgar